Job Settings

This article explains the settings available in the side panel when you click a Job.

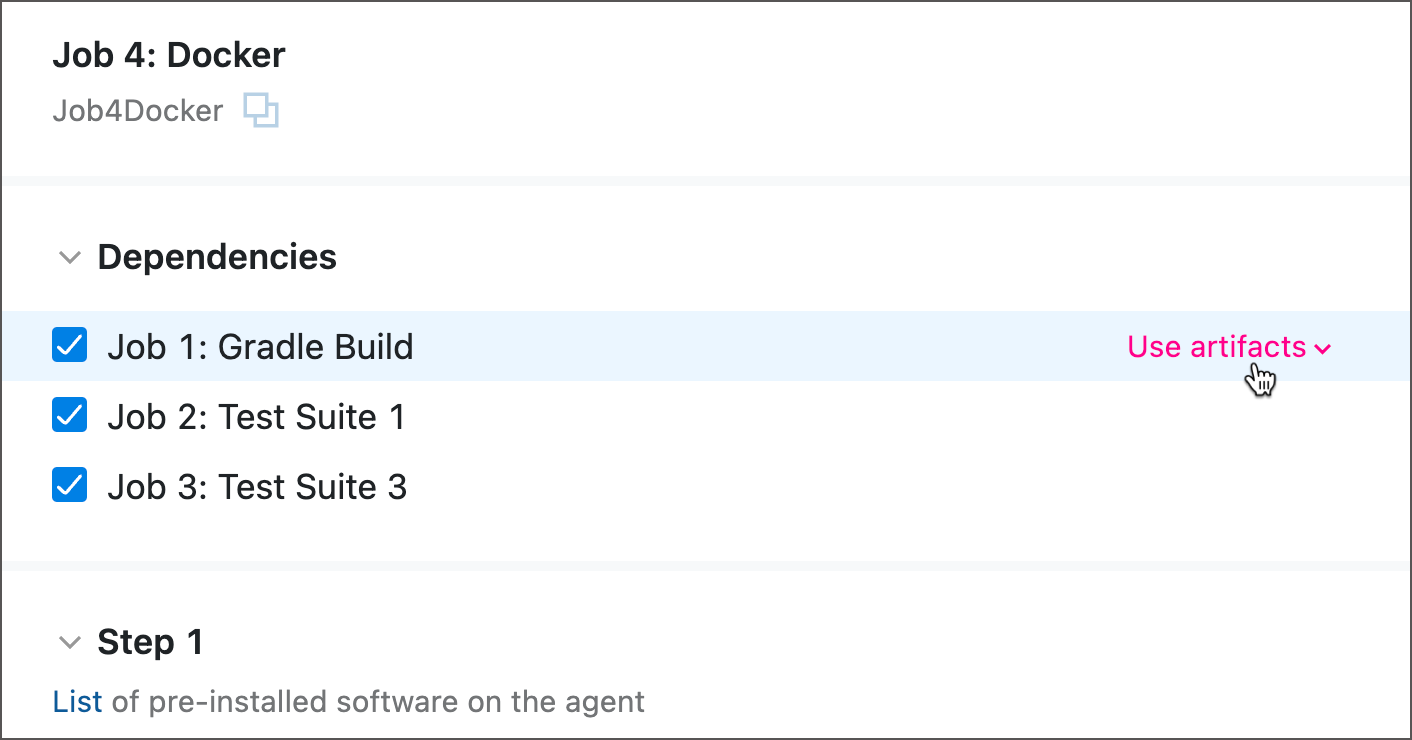

Dependencies

This section allows you to specify the Job order and exchange published files between Jobs.

Tick a checkbox next to the name of a Job that should precede the current Job. The visual graph will reflect this updated Job order.

In YAML, Jobs are arranged according to the execution order.
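The way ticked dependencies translate into an execution order can be pictured as a topological sort. The snippet below is only an illustrative sketch in Python, not TeamCity's scheduler. The Job names (`Build`, `Test`, `Publish`) and the `execution_order` helper are hypothetical.

```python
from graphlib import TopologicalSorter


def execution_order(dependencies):
    """Return Job names so that every Job comes after the Jobs it depends on.

    `dependencies` maps a Job name to the set of Jobs that must precede it.
    """
    return list(TopologicalSorter(dependencies).static_order())


# Hypothetical pipeline: Test depends on Build, Publish depends on Test.
order = execution_order({
    "Build": set(),
    "Test": {"Build"},
    "Publish": {"Test"},
})
print(order)  # prints ['Build', 'Test', 'Publish']
```

A circular set of dependencies has no valid order. In this sketch, `static_order()` raises `graphlib.CycleError` in that case.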

If a preceding Job publishes any artifacts, a dependent Job automatically downloads these artifacts to its own working directory. This enables hassle-free file sharing and allows you to quickly set up delivery pipelines (for example, a file generated by a building Job A can then be published by a separate Job B).

If you do not want a dependent Job to obtain artifacts from its predecessor, choose the Ignore artifacts option in the Use artifacts pop-up.

Dependencies and artifact import rules can also be customized using the interactive Visual Editor.

Optimizations

This section contains settings that can affect your Job performance. See this help article for more information: Pipeline Optimization.

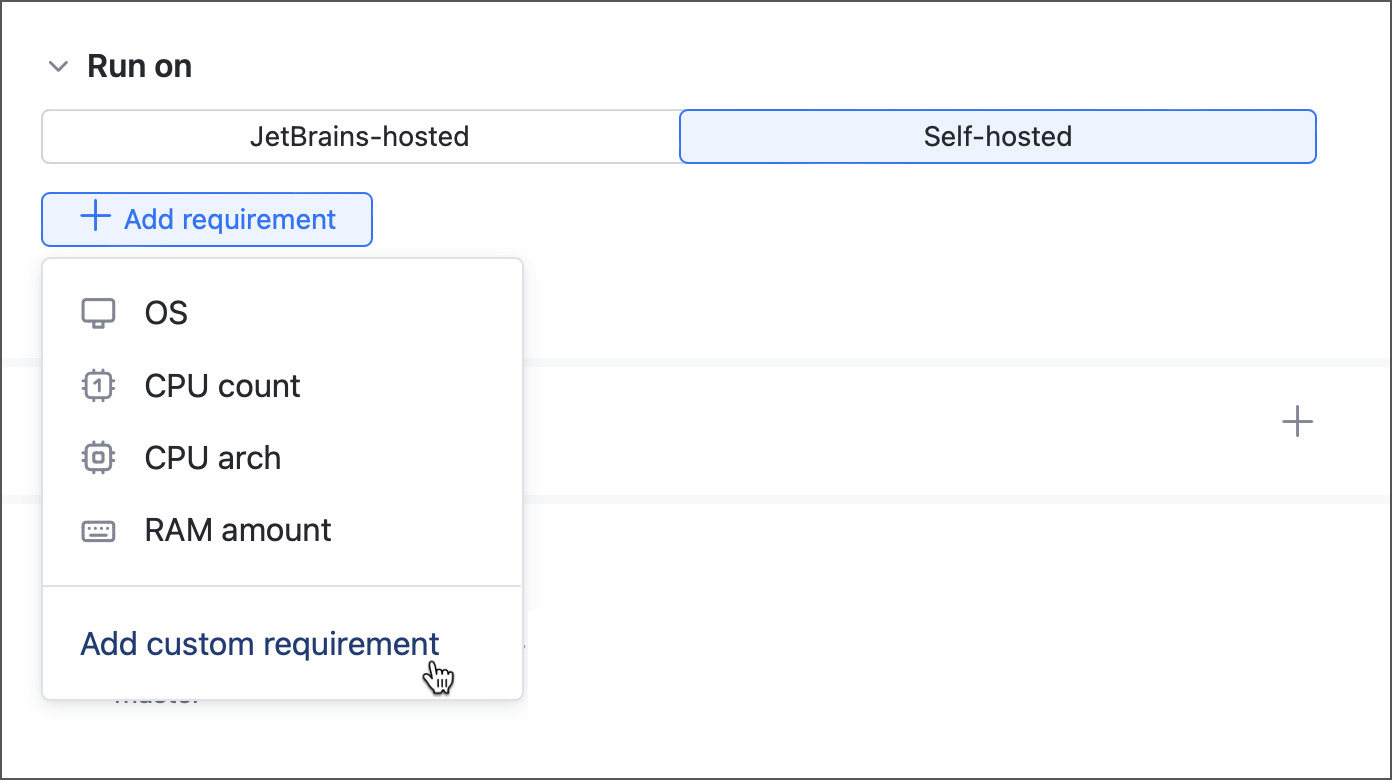

Run On

An agent is a piece of software that listens for commands from the build server and carries out the actual build processes. TeamCity Pipelines offers two agent options:

JetBrains-hosted agents are cloud agents that are configured and maintained by JetBrains. They are started on demand when a Job needs to be run and stopped after a certain delay when the build queue is empty. This option is immediately available for any new Pipelines instance. Click the "Available software" link to learn more about different types of JetBrains-hosted agents.

The Self-hosted tab allows you to utilize custom machines connected to the Pipelines server. See this article for more information: Self-Hosted Build Agents.

Parameters

Parameters are name-value pairs. Using aliases instead of raw values allows you to:

reuse values across build steps;

modify build behavior by changing parameter values without editing actual scripts or settings;

hide raw values for clarity or security.

When TeamCity encounters the %parameter_name% syntax, it inserts the corresponding parameter value instead.
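The substitution described above can be sketched in a few lines of Python. This is a simplified illustration, not TeamCity's actual resolver. The `resolve_references` helper, the sample parameter names, and the choice to leave unknown references untouched are all assumptions made for the example.

```python
import re

# Matches %name% where name consists of letters, digits, underscores, and dots.
_REFERENCE = re.compile(r"%([A-Za-z0-9_.]+)%")


def resolve_references(text, parameters):
    """Replace each %name% in `text` with the value stored in `parameters`.

    References to unknown names are left as-is (an assumption of this sketch).
    """
    def substitute(match):
        name = match.group(1)
        return parameters.get(name, match.group(0))

    return _REFERENCE.sub(substitute, text)


# Hypothetical parameter values.
params = {"default_branch": "main", "env.BUILD_MODE": "release"}
print(resolve_references("git checkout %default_branch%", params))  # prints git checkout main
```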

Parameter Levels and Scopes

You can define a parameter on a Job or Pipeline level. Job parameters are available only for this Job, whereas Pipeline parameters can be used by all of its child Jobs.

Pipeline parameters are mainly designed to be referenced in Pipeline, Job, and Step settings. For example, you may store the name of a default branch in a Pipeline parameter.

Job parameters are typically used inside build scripts. For that reason, TeamCity automatically adds the env. prefix to Job parameter names when you create them in the UI. This turns a Job parameter into an environment variable, accessible on the agent machine by any build tool.

The sample below illustrates a difference between a regular parameter and an environment variable: job_parameter is available on the agent machine directly, and so its value can be printed by a script. In turn, pipeline_parameter exists only in TeamCity and can only be reported via a parameter reference.
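From the point of view of a build script, this difference might look like the following Python sketch. It assumes the Job parameter was declared as `env.job_parameter`, so that it reaches the script as the `job_parameter` environment variable. The `describe_parameter` helper is hypothetical.

```python
import os


def describe_parameter(name, environ=os.environ):
    """Report whether `name` is visible to the script as an environment variable."""
    value = environ.get(name)
    if value is None:
        return f"{name} is not set in the environment"
    return f"{name} = {value}"


# A Job parameter declared as env.job_parameter is exported to the agent environment.
print(describe_parameter("job_parameter"))
# A Pipeline parameter without the env. prefix is not, so a script cannot read it this way.
print(describe_parameter("pipeline_parameter"))
```

To use the Pipeline parameter value in a script, pass it in through a `%pipeline_parameter%` reference in the step settings instead.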

This default logic is not the only way to declare and use TeamCity parameters. You are free to add env. prefixes to Pipeline parameters and remove them from Job parameters.

See the Output Parameters section below to learn how to expose Job parameters to downstream Jobs of the same pipeline.

Job Outputs

This section allows you to expose parameters and files that can be used by downstream Jobs.

Files

Output files are typically used for two main goals:

share files produced by a Job (Docker images, .jar files, libraries, NuGet packages, and so on) with other Jobs;

publish these files to the build Artifacts tab so that other users can download them from a server.

The sample below illustrates how to set up and use output files. Job1 shares two files it generates in its build script:

The downstream Job2 imports these files and can use them in its own build actions:

Note that in YAML, both files are explicitly mentioned under the dependencies section. In TeamCity UI, you need to select Use artifacts when adding Dependencies: all output files of a selected Job will be available at once.

See the following article for an example: Create a Multi-Job Pipeline.

Output Parameters

Job-level parameters are designed to be used only within this Job itself (for that reason, TeamCity automatically adds the env. prefix to Job parameters created in the UI). Downstream Jobs have no access to these parameters and cannot use them in their own build scripts.

However, you can use output parameters to expose native Job parameters. For example, the sample Job1 uses the env.J1P parameter. If this parameter value in some shape or form is required by downstream Jobs, you can create an output parameter.

A downstream Job2 can now use this value in its own routines via the job.<upstream Job name>.<output parameter name> syntax:
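As a small illustration of how such a reference is composed, the Python helper below builds the reference string from its parts. The `output_reference` function is hypothetical. Wrapping the name in percent signs is an assumption based on the %parameter_name% syntax described in the Parameters section.

```python
def output_reference(upstream_job, output_parameter):
    """Build a reference to an output parameter exposed by an upstream Job."""
    return f"%job.{upstream_job}.{output_parameter}%"


# Job1 and J1_OUTPUT are the names used in this article's example.
print(output_reference("Job1", "J1_OUTPUT"))  # prints %job.Job1.J1_OUTPUT%
```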

In the sample above, the env.J1P parameter is directly referenced in the J1_OUTPUT parameter. In real-life scenarios, however, Job-level parameters are used to calculate other values, and these values need to be shared with downstream Jobs. To do so, create an empty output parameter and print the special ##teamcity[setParameter name='parameter_name' value='calculated_value'] service message to set this parameter during a build run.

For example, the following sample Job calculates the date using its internal env.DATE_FORMAT parameter. This parameter makes sense only in the scope of this Job and does not need to be shared. Instead, we want to share the final date value. To do this, the setParameter command assigns a calculated value to the J1_DATE output parameter.
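A build script for such a Job could look roughly like the Python sketch below. It assumes that `env.DATE_FORMAT` holds a `strftime`-style pattern and falls back to `%Y-%m-%d` when the variable is missing. Both choices, along with the helper names, are assumptions made for this example. Special characters in values are escaped with the vertical bar, as service messages require.

```python
import os
from datetime import datetime


def escape_value(value):
    """Escape characters that are special inside TeamCity service message values."""
    # The vertical bar is replaced first so that later escapes are not doubled.
    replacements = [("|", "||"), ("'", "|'"), ("\n", "|n"),
                    ("\r", "|r"), ("[", "|["), ("]", "|]")]
    for raw, escaped in replacements:
        value = value.replace(raw, escaped)
    return value


def set_parameter_message(name, value):
    """Format a setParameter service message for the given parameter."""
    return (f"##teamcity[setParameter name='{escape_value(name)}' "
            f"value='{escape_value(value)}']")


# env.DATE_FORMAT is visible to the script as DATE_FORMAT (fallback is an assumption).
date_format = os.environ.get("DATE_FORMAT", "%Y-%m-%d")
# Printing the message to standard output sets J1_DATE for this build run.
print(set_parameter_message("J1_DATE", datetime.now().strftime(date_format)))
```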

A downstream Job can now consume this calculated value and use it in its own build steps.

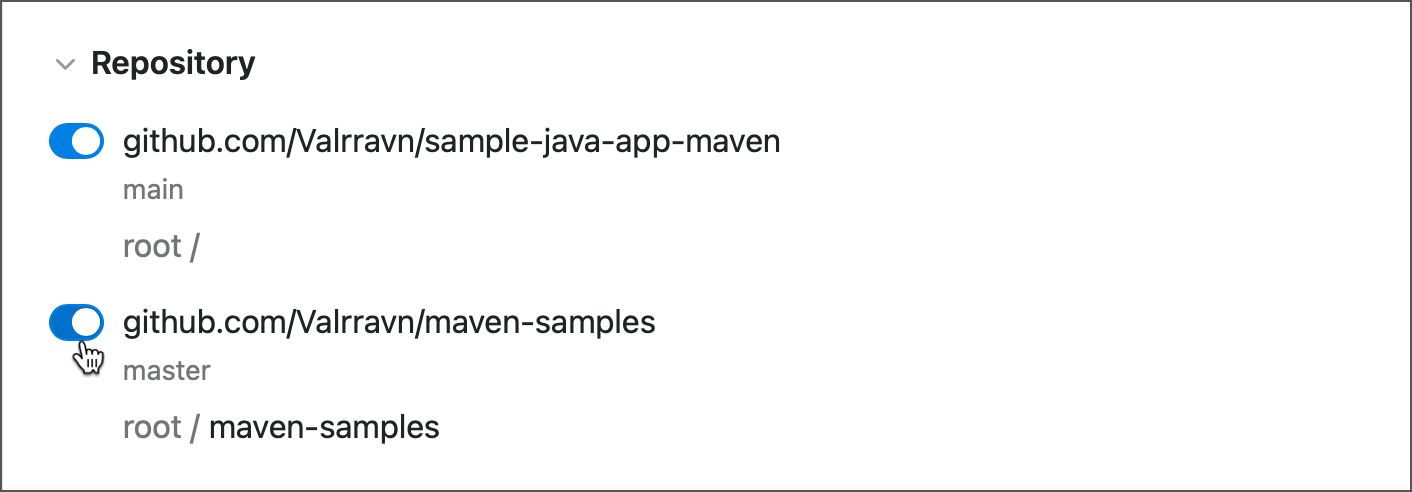

Repositories

If you set up more than one Repository in the Pipeline settings, use toggles in this section to choose which of the repositories the current Job should check out.

Note that all repositories except for the initial one are checked out to individual subfolders. Steps that use source files of these repositories should have their Working directory settings pointing to the same subfolder.

Integrations

This section allows you to enable and disable Docker and NPM integrations set up in the Pipeline settings.

You can also create a new integration directly in Job settings. These integrations are initially unavailable for other Jobs inside the same Pipeline.